Brief Research Overview

Robot learning is a field of artificial intelligence focused on algorithms that allow robots to learn to perform tasks more intelligently. It is currently receiving significant attention from the scientific community. The SMART Lab is focusing on advanced deep learning and deep reinforcement learning methods with the goal of improving the practicality of these methods through learning from and more flexible interaction with humans. We are specifically studying cognitive computing methods to improve a robot's decision-making ability by modeling and learning the fast and accurate decision-making abilities of humans, and recognition methods to enable robots to recognize and judge the identity of an object/scene in real-time even in dynamic environments and with limited information.

You can learn more about our current and past research on robot learning below.

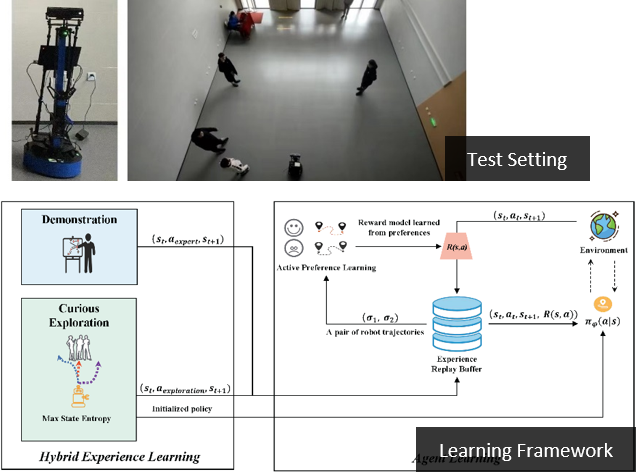

Socially-Aware Robot Navigation (2021 - Present)

Description: Socially aware robot navigation, in which a robot must optimize its trajectory to maintain comfortable and compliant spatial interactions with humans while also reaching its goal without collisions, is a fundamental but challenging task in the context of human-robot interaction. While existing learning-based methods have performed better than model-based ones, they still have drawbacks: reinforcement learning relies on handcrafted rewards that may not effectively quantify broad social compliance and can lead to reward exploitation problems, and inverse reinforcement learning requires expensive human demonstrations. The SMART Lab investigates various practical and theoretical robot learning topics in the context of robot navigation. For example, we recently proposed a feedback-efficient active preference learning (FAPL) approach for socially aware robot navigation, which translates human comfort and expectation into a reward model that guides the robot agent to explore latent aspects of social compliance. The proposed method improved the efficiency of human feedback and samples through the use of hybrid experiential learning, and we evaluated the benefits of the robot behaviors learned from FAPL through extensive experiments.
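To illustrate the general idea of turning human preferences into a reward model, the following is a minimal sketch of preference-based reward learning with a Bradley-Terry model. It is not the FAPL implementation: the linear reward, the two features (progress toward the goal, intrusion into personal space), and all names and numbers are assumptions chosen purely for illustration.

```python
import math


def feature_sum(segment):
    """Sum each feature over the time steps of a trajectory segment."""
    return [sum(step[i] for step in segment) for i in range(len(segment[0]))]


def preference_probability(weights, seg_a, seg_b):
    """Bradley-Terry probability that seg_a is preferred over seg_b."""
    phi_a, phi_b = feature_sum(seg_a), feature_sum(seg_b)
    diff = sum(w * (a - b) for w, a, b in zip(weights, phi_a, phi_b))
    return 1.0 / (1.0 + math.exp(-diff))


def fit_reward(preferences, n_features, lr=0.1, epochs=200):
    """Fit linear reward weights by gradient ascent on the preference log-likelihood.

    preferences: list of (preferred_segment, other_segment) pairs.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, other in preferences:
            p = preference_probability(weights, preferred, other)
            phi_p, phi_o = feature_sum(preferred), feature_sum(other)
            # Gradient of log p with respect to the weights is (1 - p) * (phi_p - phi_o).
            for i in range(n_features):
                weights[i] += lr * (1.0 - p) * (phi_p[i] - phi_o[i])
    return weights


# Hypothetical per-step features: [progress_to_goal, personal_space_intrusion].
polite_segment = [[0.5, 0.0], [0.5, 0.1]]
intrusive_segment = [[0.6, 0.8], [0.6, 0.9]]
polite_segment_2 = [[0.4, 0.1], [0.6, 0.0]]
intrusive_segment_2 = [[0.7, 0.6], [0.5, 0.7]]

# Simulated human feedback: the polite segment is preferred in both queries.
human_preferences = [
    (polite_segment, intrusive_segment),
    (polite_segment_2, intrusive_segment_2),
]

learned_weights = fit_reward(human_preferences, n_features=2)
print("learned reward weights:", learned_weights)
```

In this toy setting the learned weight on the intrusion feature becomes negative, so a policy trained against the learned reward would be penalized for entering a person's space. An active approach would additionally choose which segment pairs to show the human, which this sketch does not attempt.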

Grant: NSF

People: Ruiqi Wang, Weizheng Wang

Project Website: https://sites.google.com/view/san-fapl; https://sites.google.com/view/san-navistar

Selected Publications:

- Weizheng Wang, Ruiqi Wang, Le Mao, and Byung-Cheol Min, "NaviSTAR: Benchmarking Socially Aware Robot Navigation with Hybrid Spatio-Temporal Graph Transformer and Active Learning", 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, USA, October 1-5, 2023. Paper Link, Video Link, GitHub Link

- Ruiqi Wang, Weizheng Wang, and Byung-Cheol Min, "Feedback-efficient Active Preference Learning for Socially Aware Robot Navigation", 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Kyoto, Japan, October 23-27, 2022. Paper Link, Video Link, GitHub Link

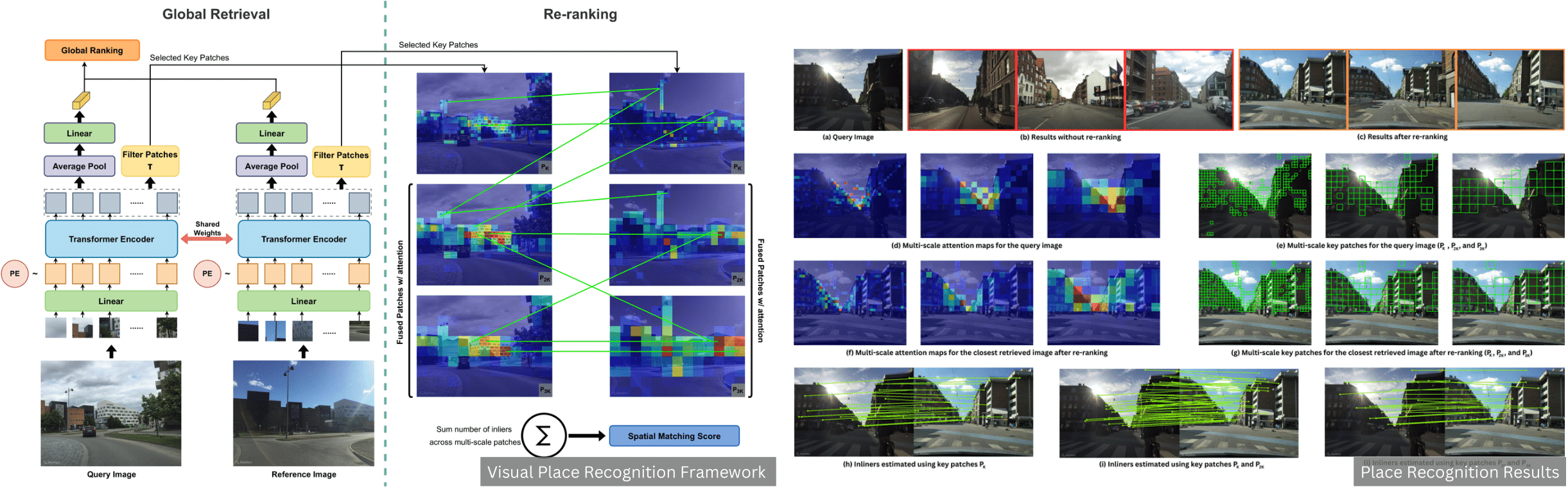

Visual Localization and Mapping (2022 - Present)

Description: Visual localization enables autonomous vehicles and robots to navigate based on visual observations of their operating environment. In visual localization, the agent estimates its pose based on the image from the camera. The operating environment of the agent can undergo various changes due to illumination, day and night, seasons, structural changes, and so on. In vision-based localization, it is important to adapt to these changes, which can significantly impact visual perception. The SMART Lab develops methods that enable autonomous agents to localize robustly despite these changes in their surroundings. For example, we developed a visual place recognition system that helps the autonomous agent identify its location on a large-scale map by retrieving the reference image that most closely matches the query image from the camera. The proposed method uses concise image descriptors, so that image processing can be done rapidly and with less memory consumption.
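The retrieval step of visual place recognition can be sketched as a nearest-neighbor search over compact descriptors. The example below is a simplified illustration and not the lab's system: the 4-dimensional descriptors, the place names, and the choice of cosine similarity are assumptions, and a real pipeline would compute descriptors from images rather than hard-code them.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_place(query_descriptor, reference_db):
    """Return (place_id, similarity) of the reference descriptor closest to the query."""
    best_id, best_score = None, -1.0
    for place_id, descriptor in reference_db.items():
        score = cosine_similarity(query_descriptor, descriptor)
        if score > best_score:
            best_id, best_score = place_id, score
    return best_id, best_score


# Hypothetical map: one short descriptor per reference image.
reference_db = {
    "lobby": [0.9, 0.1, 0.0, 0.2],
    "corridor": [0.1, 0.8, 0.3, 0.0],
    "lab": [0.0, 0.2, 0.9, 0.4],
}

# Hypothetical descriptor of the current camera image.
query = [0.05, 0.25, 0.85, 0.35]

place, score = retrieve_place(query, reference_db)
print(place, round(score, 3))
```

Shorter descriptors reduce both the memory needed to store the map and the cost of each comparison, which is the trade-off the description above refers to. For large maps, the linear scan would typically be replaced by an approximate nearest-neighbor index.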

Grant: Purdue University

People: Shyam Sundar Kannan

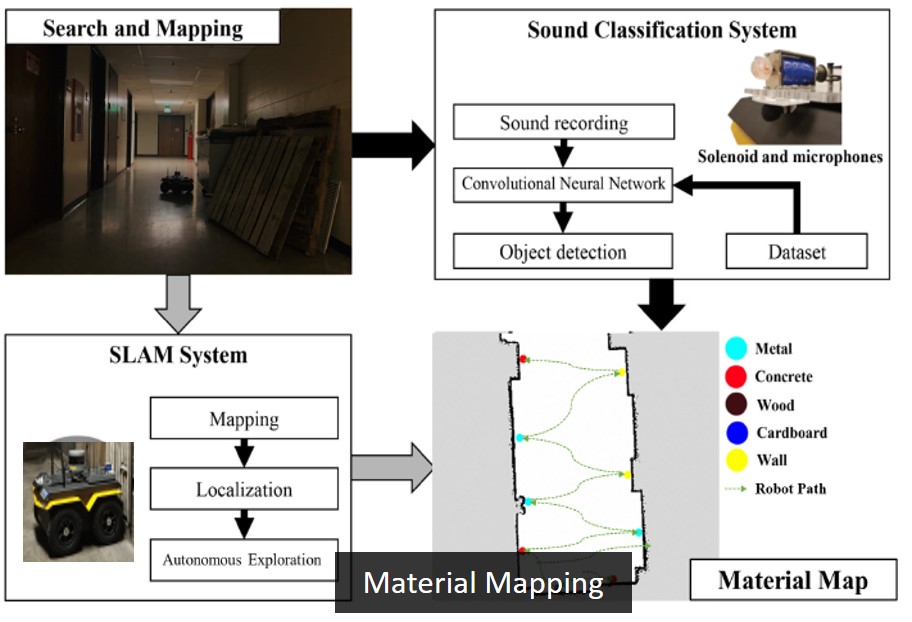

Learning-based Robot Recognition (2017 - Present)

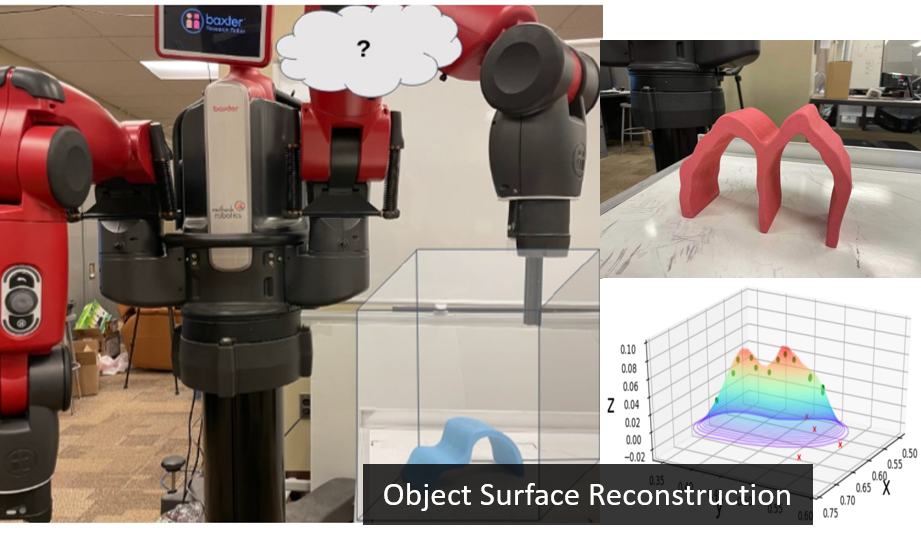

Description: The SMART Lab is researching learning-based robot recognition technology to enable robots to recognize and identify objects/scenes in real-time with the same ease as humans, even in dynamic environments and with limited information. We aim to apply our research and developments to a variety of applications, including the navigation of autonomous robots/cars in dynamic environments, the detection of malware/cyberattacks, object classification and reconstruction, the prediction of the cognitive and affective states of humans, and the allocation of workloads within human-robot teams. For example, we developed a system in which a mobile robot autonomously navigates an unknown environment through simultaneous localization and mapping (SLAM) and uses a tapping mechanism to identify objects and materials in the environment. The robot taps an object with a linear solenoid and uses a microphone to measure the resulting sound, allowing it to identify the object and material. We used convolutional neural networks (CNNs) to develop the associated tapping-based material classification system.

Grants: NSF, Purdue University

People: Wonse Jo, Shyam Sundar Kannan, Go-Eum Cha, Vishnunandan Venkatesh, Ruiqi Wang

Selected Publications:

- Su Sun and Byung-Cheol Min, "Active Tapping via Gaussian Process for Efficient Unknown Object Surface Reconstruction", 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Workshop on RoboTac 2021: New Advances in Tactile Sensation, Interactive Perception, Control, and Learning. A Soft Robotic Perspective on Grasp, Manipulation, & HRI, Prague, Czech Republic, Sep 27 – Oct 1, 2021. Paper Link

- Shyam Sundar Kannan, Wonse Jo, Ramviyas Parasuraman, and Byung-Cheol Min, "Material Mapping in Unknown Environments using Tapping Sound", 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2020), Las Vegas, NV, USA, 25-29 October, 2020. Paper Link, Video Link

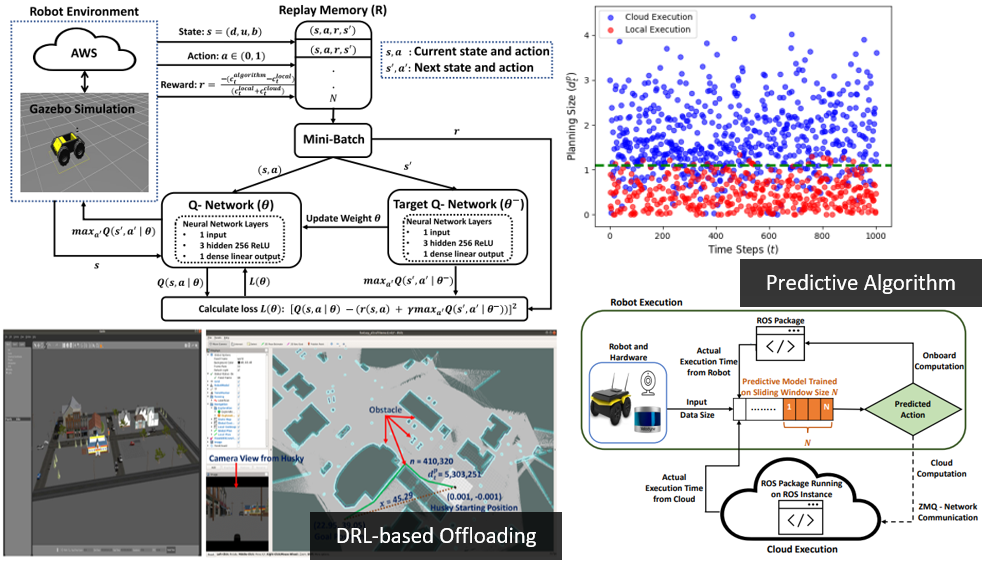

Application Offloading Problem (2018 - 2022)

Description: Robots come with a variety of computing capabilities, and running computationally-intensive applications on robots can be challenging due to their limited onboard computing, storage, and power capabilities. Cloud computing, on the other hand, provides on-demand computing capabilities, making it a potential solution for overcoming these resource constraints. However, effectively offloading tasks requires an application solution that does not underutilize the robot's own computational capabilities and makes decisions based on cost parameters such as latency and CPU availability. In this research, we address the application offloading problem: how to design an efficient offloading framework and algorithm that optimally uses a robot's limited onboard capabilities and quickly reaches a consensus on when to offload without any prior knowledge of the application. Recently, we developed a predictive algorithm to predict the execution time of an application under both cloud and onboard computation, based on the size of the application's input data. This algorithm is designed for online learning, meaning it can be trained after the application has been initiated. In addition, we formulated the offloading problem as a Markov decision process and developed a deep reinforcement learning-based Deep Q-network (DQN) approach.
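The idea of predicting execution time from input size and learning online can be sketched as follows. This is an illustrative stand-in rather than the published algorithm: it assumes execution time is roughly linear in input size, fits that line incrementally with running sums, and picks whichever target has the lower predicted time. The class, the function, and the timing numbers are hypothetical.

```python
class OnlineTimePredictor:
    """Incremental least-squares fit of time = slope * input_size + intercept."""

    def __init__(self):
        self.n = 0
        self.sum_x = 0.0
        self.sum_y = 0.0
        self.sum_xx = 0.0
        self.sum_xy = 0.0

    def update(self, input_size, observed_time):
        """Fold one new (input size, measured execution time) observation into the fit."""
        self.n += 1
        self.sum_x += input_size
        self.sum_y += observed_time
        self.sum_xx += input_size * input_size
        self.sum_xy += input_size * observed_time

    def predict(self, input_size):
        """Predict execution time for a given input size from the data seen so far."""
        if self.n == 0:
            return 0.0
        denom = self.n * self.sum_xx - self.sum_x ** 2
        if denom == 0.0:
            # Only one distinct input size seen so far: fall back to the mean time.
            return self.sum_y / self.n
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope * input_size + intercept


def choose_target(input_size, onboard_model, cloud_model):
    """Pick the execution target with the lower predicted time."""
    onboard_time = onboard_model.predict(input_size)
    cloud_time = cloud_model.predict(input_size)
    return "cloud" if cloud_time < onboard_time else "onboard"


onboard_model = OnlineTimePredictor()
cloud_model = OnlineTimePredictor()

# Hypothetical measurements gathered while the application runs:
# onboard is slow per unit of data, cloud is fast but pays a fixed network cost.
for size in [1.0, 2.0, 3.0]:
    onboard_model.update(size, 2.0 * size)
    cloud_model.update(size, 0.5 * size + 3.0)

print(choose_target(1.0, onboard_model, cloud_model))
print(choose_target(4.0, onboard_model, cloud_model))
```

With these made-up timings, small inputs stay onboard because the fixed network cost dominates, while large inputs are offloaded. A reinforcement learning formulation such as the DQN approach mentioned above would instead learn the offloading decision from a richer state (for example latency and CPU availability) rather than from a single fitted line.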

Grants: Purdue University

People: Manoj Penmetcha, Shyam Sundar Kannan

Selected Publications:

- Manoj Penmetcha and Byung-Cheol Min, "A Deep Reinforcement Learning-based Dynamic Computational Offloading Method for Cloud Robotics", IEEE Access, Vol. 9, pp. 60265-60279, 2021. Paper Link, Video Link

- Manoj Penmetcha, Shyam Sundar Kannan, and Byung-Cheol Min, "A Predictive Application Offloading Algorithm using Small Datasets for Cloud Robotics", 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Virtual, Melbourne, Australia, 17-20 October, 2021. Paper Link, Video Link